The First Computer Bug

On March 20, 2022, a guy walked into a CVS pharmacy in the New York City suburbs to pick up some odds and ends. The CVS was woefully understaffed and the shelves weren’t stocked properly, with bins of goods lying around haphazardly. The man resigned himself to leaving the store without everything on his shopping list and made his way to the self-checkout kiosk, as the store (again, it was woefully understaffed) only had one person behind the registers, and that person was busy with another task. The customer approached the kiosk only to see an error message instructing him to remove his already-bagged items. As he hadn’t yet placed any items in the bagging area, and the previous customer hadn’t left anything behind, he was unable to use the self-checkout kiosk. He asked the lone employee for help and she simply said that “the machine has a lot of bugs.”

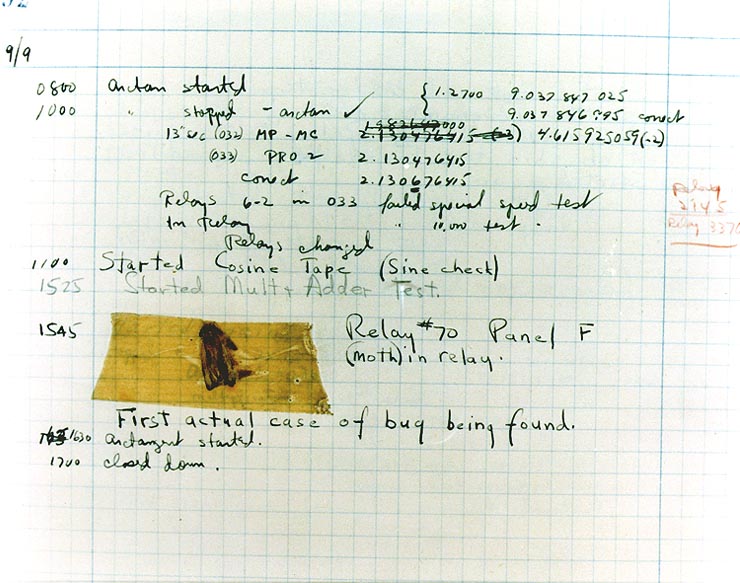

She didn’t mean it literally, but if she had, it wouldn’t be the first time, as seen above.

Computer bugs, as anyone living in the year 2022 can attest, are annoying. The machines and software that we expect to work in certain ways simply fail to do so, for reasons that are unclear to us end-users. Colloquially, that kind of failure is attributed to a “bug.” Despite the image above, that’s not a literal reference to any specific insect. As early as the 1870s, Thomas Edison noted that the “little faults and difficulties” with modern machines were often attributed to “Bugs” (he capitalized the B), and computers hadn’t been invented at that point in history. But the term stuck, at least in engineering circles.

Fast forward to the mid-1940s. Massive electromechanical computers entered the market, but really, they were only available to huge institutions like universities or the military. The Harvard Mark I, for example, was developed by IBM and released on August 7, 1944, and there’s really no way you’d have one in your house; it weighed more than 9,000 pounds. Its successor, the Harvard Mark II, came out three years later — and it was about five times larger.

But in September of 1947, the Mark II in use at Harvard wasn’t working right; as National Geographic reports, it “was delivering consistent errors.” Machines at the time didn’t run complicated software, so the engineering team opened up the machine to see what they could find. And what they found, as seen above, was an actual, literal bug. As Smithsonian explains, “the trapped insect had disrupted the electronics of the computer.”

Seeing the humor in the moment (and potentially the historical significance of it as well), the engineering team took the moth and taped it into the team’s log book, noting that this was, as seen above, the “[f]irst actual case of [a] bug being found” in the history of computing. The team either worked closely with or reported to future United States Rear Admiral Grace Hopper, one of the pioneers of modern computing, and she took possession of the log book and shared the story far and wide. Years later, Hopper donated the log book to the Smithsonian’s Museum of American History, where it now resides. The moth, and the effort to remove it, are often credited with being the origin of the terms “computer bug” and “debugging.” That etymology is likely incorrect (see the Edison note above), but the moth and Hopper almost certainly popularized the terms.

Bonus fact: The phrase “a fly in the ointment” refers to a minor irritation that spoils an otherwise good thing, and no, it has nothing to do with computers, either; it predates them by a lot. The term comes from the Bible, and specifically, the King James translation of the book of Ecclesiastes. Ointments were used for religious purposes (for anointment), and you don’t want dead flies being part of the process. As The Phrase Finder notes, Ecclesiastes states that “dead flies cause the ointment of the apothecary to send forth a stinking savor,” rendering it unfit for use.

From the Archives: The Bug in the Plan: How a mosquito helped crack a crime caper.